Feminist AI Ethics Toolkit

Description

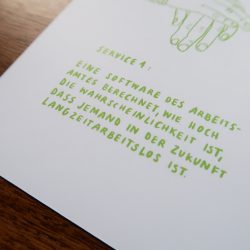

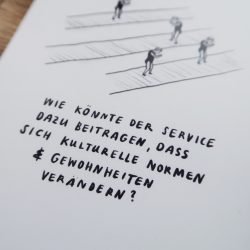

I created a workshop toolkit to discuss and raise awareness of the ethical implications of AI tools, drawing on social justice and intersectional feminist approaches. Based on utopian and dystopian scenarios and prompts about what might happen with AI technologies in possible futures, participants can reflect together and create concepts for actionable countermechanisms.

What is the Topic?

When people make decisions about other people, preconceptions, stereotypes, prejudices and learned behavior patterns influence the process. To eliminate these biases, decision-making processes are increasingly being outsourced to algorithmic systems, in the belief that "neutral technology" can make more objective and non-discriminatory decisions than humans. The reality, however, looks different: algorithmic decision-making systems reproduce sexist, racist and other discriminatory structures that prevail in society, or create entirely new possibilities for oppression and marginalisation. They can reinforce the very social injustice they are supposed to eliminate.

Why does it look like this?

The toolkit is the result of many iterations, which showed how important both a concrete exchange and a look into the future are for this topic. I gathered inspiration and feedback in interviews, held a co-creation session during development and tested the prototype in workshops. I believe that this toolkit can provide a good introduction to the discourse on fairness, social justice and new technologies. It enables people with different levels of knowledge and different disciplinary backgrounds to discuss, based on concrete examples and open questions, what their desired futures might be.

What is special?

So far, there are hardly any approaches that communicate the effects and concrete problems of AI tools to a broad range of people who are not involved in the technology, nor are there approaches that help technologists focus on the social rather than the technical. Looking at who currently shapes the design of algorithmic decision-making systems, one has to realize that it is often not a designer: those who professionally shape products and processes are hardly involved in this one. It is the designer's chance and duty to become a mediator between technology and sociology. It is the work of a designer to identify different subject areas, to make the complex more understandable, to stay tangible, to prototype, to test early and, above all, to empathize with people and recognize their needs.

What is new?

In the discourse on discriminatory algorithmic decision-making systems, the problem is often reified and reduced to purely technical aspects, both in the industry and in many scientific publications, either for lack of awareness or to avoid having to deal with the far more complex societal problems. Furthermore, the analysis of algorithmic decision-making systems has so far focused solely on the current application of the system in question and does not consider future consequences or unfair applications. The toolkit addresses this gap and facilitates discussions on the social consequences of AI systems.